Overview:

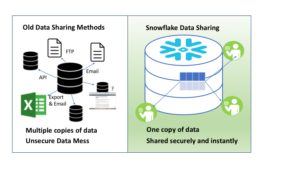

I have been working with the Snowflake Data Cloud since it was just an analytical RDBMS. Since the beginning of 2018, Snowflake has been fun to work with as a data professional and data entrepreneur. It gives data professionals remarkably flexible data processing power in the cloud. The key to a successful Snowflake deployment is setting up security and account optimizations correctly from the beginning. In this article, we will discuss the 'CREATE WAREHOUSE' default settings.

Snowflake Cost and Workload Optimization is the Key

After analyzing hundreds of Snowflake customer accounts, we found key processes to optimize Snowflake for compute and storage costs. The best way to deploy Snowflake successfully is to set it up for cost and workload optimization from the start.

The Snowflake default "create warehouse" settings are not optimized to limit costs. That is why we built our Snoptimizer service (Snowflake Cost Optimization Service) to automatically and easily optimize your Snowflake Account(s). There is no other way to continuously optimize queries and costs so your Snowflake Cloud Data solution runs as efficiently as possible.

Let's quickly review how Snowflake Accounts' default settings are currently set.

Here is the default screen that comes up when I click +Warehouse in the Classic Console.

Create Warehouse Default Options for the Classic Console

For those already in Snowsight (aka the Preview App), here is the default screen there. It is nearly identical.

Create Warehouse Default Options for Snowsight

So let's dig into the default settings you get in these Web UIs if you just choose a name and click "Create Warehouse", and evaluate what happens to your Snowflake compute if you leave them unchanged.

These default settings will establish the initial configuration for our Snowflake Compute. By understanding the defaults, we can determine if any changes are needed to optimize performance, security, cost, or other factors that are important for our specific use case. The defaults are designed to work out of the box for most general-purpose workloads but rarely meet every need.
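Before changing anything, it helps to see what your existing warehouses are actually set to. The following is a minimal sketch, assuming your current role can see the warehouses in question. The lowercase, double-quoted column names follow the SHOW WAREHOUSES output as I understand it, so treat the exact column list as an assumption and fall back to SELECT * if one is missing in your account.

```sql
-- List the warehouses visible to the current role.
SHOW WAREHOUSES;

-- Pull a few of the settings discussed in this article out of the SHOW output.
-- SHOW output columns are lowercase, so they must be double-quoted here.
SELECT "name", "size", "auto_suspend", "auto_resume", "state"
FROM TABLE(RESULT_SCAN(LAST_QUERY_ID()));
```

Run the two statements back to back in the same session, because RESULT_SCAN(LAST_QUERY_ID()) reads the result of the statement that ran immediately before it.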

Create Warehouse - Default Setting #1

Size (really the size of the warehouse's compute): X-Large. I assume you understand how Snowflake compute works and know the Snowflake warehouse T-shirt sizes. Notice that the default is an X-Large warehouse rather than one of the smaller T-shirt sizes (XS, S, M, L). This default is the same for both the Classic Console and Snowsight (the Preview App).
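To put the size default in perspective: standard warehouses consume 1, 2, 4, 8, and 16 credits per hour for XS, S, M, L, and X-Large respectively, so the X-Large default burns credits 16 times faster than an XS while it is running. A minimal sketch of starting small and scaling up only when needed follows. The warehouse name my_small_wh is hypothetical.

```sql
-- Hypothetical warehouse name: start at the smallest size.
CREATE WAREHOUSE IF NOT EXISTS my_small_wh
  WAREHOUSE_SIZE = 'XSMALL';

-- Resize later only if the workload turns out to need more compute.
ALTER WAREHOUSE my_small_wh SET WAREHOUSE_SIZE = 'SMALL';
```

Resizing is a single ALTER statement, which is why starting small is usually the lower-risk choice.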

Create Warehouse - Default Setting #2

Maximum Clusters: 2

While enabling multi-cluster scaling by default makes sense if you want it, it still has significant cost implications. It assumes the customer wants to launch a second cluster, and pay more for it, once this warehouse reaches a certain level of queued statements. Sticking with the X-Large setting, duplicating a cluster has serious cost consequences of $X/hr.

This setting only applies to the Classic Console. It is also only set if you have Enterprise Edition or higher, since Standard Edition does not offer multi-cluster warehouses.
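If you do not want a second cluster, you can cap the warehouse at one. This is a sketch only: my_wh is a hypothetical warehouse name, and the statement assumes an Enterprise Edition (or higher) account where multi-cluster settings are available. The SELECT is just arithmetic on the published X-Large rate of 16 credits per hour per cluster.

```sql
-- Hypothetical warehouse name: cap the warehouse at a single cluster.
ALTER WAREHOUSE my_wh SET MAX_CLUSTER_COUNT = 1;

-- Credits per hour while both X-Large clusters of the default setup are running.
SELECT 16 * 2 AS xlarge_two_cluster_credits_per_hour;
```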

Create Warehouse - Default Setting #3

Minimum Clusters: 1

This is only the default setting for the Classic Console.

Create Warehouse - Default Setting #4

Scaling Policy: Standard

This setting is hard to rate, but if you are a cost-conscious customer you would want this to be "Economy" rather than "Standard". The trade-off is that with "Standard", the 2nd cluster that is enabled by default kicks in as soon as queuing happens on your warehouse, whereas "Economy" does not launch a 2nd cluster until Snowflake estimates that the cluster would have at least 6 minutes of work to perform.

This is only the default setting for the Classic Console. In Snowsight, the scaling policy only comes into play once you toggle "Multi-cluster Warehouse" on, and it then defaults to "Standard" rather than "Economy".
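Switching the policy on an existing multi-cluster warehouse is a one-line change. As a sketch, assuming a hypothetical warehouse named my_wh on Enterprise Edition or higher:

```sql
-- Hypothetical warehouse name: favor cost over queue time.
-- With ECONOMY, an extra cluster starts only when Snowflake estimates
-- there is enough queued work to keep it busy for several minutes.
ALTER WAREHOUSE my_wh SET SCALING_POLICY = 'ECONOMY';
```

The cost of this choice is that queries may wait in the queue longer before extra capacity arrives, so it suits batch workloads better than interactive ones.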

Create Warehouse - Default Setting #5

Auto Suspend: 10 minutes

For many warehouses, especially ELT/ETL warehouses, this default is typically too high. A loading warehouse that runs on a regular schedule rarely benefits from keeping its cache warm for that long. Our Snoptimizer service finds inefficient and potentially costly settings like this.

For a loading warehouse, Snoptimizer immediately saves 599 seconds of idle compute time on every interval. As discussed in the Snowflake Warehouse Best Practice Auto Suspend article, this can significantly reduce costs, especially for larger load warehouses.

We talk more about optimizing warehouse settings in this article, but reducing this setting can substantially lower expenses with no impact on performance.

NOTE: This defaults to the same setting for both the Classic Console and Snowsight (the Preview App).
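As a rough sketch of what lowering this looks like in SQL, assume a hypothetical loading warehouse named my_load_wh. The 60-second value is my illustrative choice, not a universal recommendation. AUTO_SUSPEND is specified in seconds.

```sql
-- Hypothetical warehouse name: suspend after 60 idle seconds instead of 600.
ALTER WAREHOUSE my_load_wh SET AUTO_SUSPEND = 60;

-- Credits spent on one full 600-second idle window for an X-Large
-- warehouse (16 credits per hour), before the default suspend kicks in.
SELECT ROUND(600 / 3600 * 16, 2) AS idle_credits_per_window;
```

Multiply that idle figure by the number of load intervals per day to see how quickly the default adds up.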

Create Warehouse - Default Setting #6

Auto Resume Checkbox: Checked by default. This setting is fine as is. I do not recall the last time I created a warehouse without "Auto Resume" checked. Snowflake's ability to resume a warehouse within milliseconds or seconds once a query is submitted delivers compute automatically, exactly when users need it. This is revolutionary and useful!

NOTE: This defaults to the same for both the Classic Console and Snowsight (the Preview App).

Create Warehouse - Default Setting #7

Click "Create Warehouse": The Snowflake warehouse is immediately started. I do not prefer this behavior. I do not think a new warehouse should immediately go into the Running state and start consuming credits. It is too easy for a new SYSADMIN to start a warehouse they do not need. The previous default already enables "Auto Resume", so the warehouse will resume when a job is sent to it, and there is no need to start it automatically.

NOTE: This behavior is the same for both the Classic Console and Snowsight (the Preview App).
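In SQL you can avoid the immediate start with the INITIALLY_SUSPENDED parameter. A minimal sketch, using a hypothetical warehouse name:

```sql
-- Hypothetical warehouse name: create the warehouse in the Suspended state.
-- It consumes no credits until the first query arrives and auto-resume starts it.
CREATE WAREHOUSE IF NOT EXISTS my_idle_wh
  WAREHOUSE_SIZE = 'XSMALL'
  AUTO_RESUME = TRUE
  INITIALLY_SUSPENDED = TRUE;
```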

One last thing...

As an extra bonus, here is the SQL for those of you who just do not do "GUI".

Let's go to the Snowflake CREATE WAREHOUSE code to see what is happening...

DEFAULT SETTINGS:

    CREATE WAREHOUSE XLARGE_BY_DEFAULT WITH
      WAREHOUSE_SIZE = 'XLARGE'
      WAREHOUSE_TYPE = 'STANDARD'
      AUTO_SUSPEND = 600
      AUTO_RESUME = TRUE
      MIN_CLUSTER_COUNT = 1
      MAX_CLUSTER_COUNT = 2
      SCALING_POLICY = 'STANDARD'
      COMMENT = 'This sucker will consume a lot of credits fast';
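For contrast, here is a sketch of a more cost-conscious starting point. The warehouse name and the specific values (XSMALL, 60 seconds) are my illustrative assumptions, not settings prescribed by Snowflake or by Snoptimizer. I left out the multi-cluster parameters so the statement does not depend on Enterprise Edition.

```sql
-- Hypothetical warehouse: small, quick to suspend, and not started on creation.
CREATE WAREHOUSE IF NOT EXISTS COST_CONSCIOUS_WH WITH
  WAREHOUSE_SIZE = 'XSMALL'
  WAREHOUSE_TYPE = 'STANDARD'
  AUTO_SUSPEND = 60
  AUTO_RESUME = TRUE
  INITIALLY_SUSPENDED = TRUE
  COMMENT = 'Starts small and suspended; resize only if the workload needs it';
```

From here you can scale up with ALTER WAREHOUSE once real workload data shows that you need to.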

Conclusion:

Snowflake default warehouse settings are not optimized for cost and workload. The default settings establish an X-Large warehouse, allow up to 2 clusters which increases costs, use a "Standard" scaling policy and 10-minute auto-suspend, and immediately start the warehouse upon creation. These defaults work for general use but rarely meet specific needs. Optimizing settings can significantly reduce costs with no impact on performance.