Introduction:

Today’s article provides a recap of the Snowflake Summit 2022, including the key feature announcements and innovations. We highlight the major takeaways from the event and outline Snowflake's positioning as a full-stack business solution environment capable of creating business applications.

We also include a more in-depth discussion of Snowflake's seven pillars of innovation, which include all data, all workloads, global, self-managed, programmable, marketplace, and governed.

Snowflake Summit 2022 Recap from a Snowflake Data Superhero:

If you were unable to attend the Snowflake Summit, or missed any part of the Snowflake Summit Opening Keynote, here is a recap of the most important feature announcements.

Here are my top announcements, mostly in the order in which they were announced. It was overwhelming to keep up with the number of announcements this week!

Cost Governance:

1. A new concept, Resource Groups, was announced. It allows you to combine all kinds of Snowflake data objects to monitor their resource usage. This is a huge improvement since Resource Monitors were previously quite primitive.

2. Budgets that you can track usage against were also announced. Resource Groups and Budgets are coming to Private Preview in the next few weeks.

3. More usage metrics are also being made available, both for SnowPros like us and for monitoring tools. This is important since many enterprise businesses have been looking for this.
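
To make the Resource Groups and Budgets idea a bit more concrete, here is a minimal Python sketch of the concept only. It does not use any Snowflake API, since the features were only heading into Private Preview. Every name in it (`ResourceGroup`, `budget_status`, the object names, the credit figures) is hypothetical. It simply groups usage from several objects and compares the total against a budget.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ResourceGroup:
    """Hypothetical stand-in for a set of Snowflake objects tracked together."""
    name: str
    budget_credits: float
    # Maps an object name (warehouse, table, pipe, ...) to credits consumed.
    usage_by_object: Dict[str, float] = field(default_factory=dict)

    def record_usage(self, object_name: str, credits: float) -> None:
        """Add consumed credits for one object in the group."""
        self.usage_by_object[object_name] = (
            self.usage_by_object.get(object_name, 0.0) + credits
        )

    def total_usage(self) -> float:
        """Sum the credits consumed by every object in the group."""
        return sum(self.usage_by_object.values())


def budget_status(group: ResourceGroup, warn_ratio: float = 0.8) -> str:
    """Classify the group's spend as 'ok', 'warning', or 'over' budget."""
    ratio = group.total_usage() / group.budget_credits
    if ratio > 1.0:
        return "over"
    if ratio >= warn_ratio:
        return "warning"
    return "ok"


# Illustrative data only; these are not real Snowflake objects or figures.
marketing = ResourceGroup(name="marketing_analytics", budget_credits=100.0)
marketing.record_usage("REPORTING_WH", 55.0)
marketing.record_usage("CLICKSTREAM_PIPE", 20.0)
marketing.record_usage("REPORTING_WH", 10.0)

print(marketing.total_usage(), budget_status(marketing))
```

With the sample figures, the group has used 85 of its 100 credits, so the sketch reports `warning`. How Snowflake actually models groups, budgets, and thresholds may differ.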

Replication Improvements on SnowGrid:

4. Account Level Object Replication: Snowflake previously allowed only data replication and not other account-type objects. Now, account-level objects beyond data can supposedly be replicated as well.

5. Pipeline Replication and Pipeline Failover: Now, stages and pipes can be replicated. According to Kleinerman, this feature will be available soon in Preview.

Data Management and Governance Improvements:

6. The combination of tags and policies. Tags and policies can now be used together. This is in Private Preview now and will go into Public Preview very soon.

Expanding External Table Support and Native Iceberg Tables:

7. We will soon have support for external tables in the Apache Iceberg format. Keep in mind, however, that external tables are read-only and have certain limitations. Take a look at what Snowflake did in #9 below.

8. Snowflake is broadening its abilities to manage on-premises data by partnering with storage vendors Dell Technologies and Pure Storage. The integration is anticipated to be available in a private preview in the coming weeks.

9. Snowflake announced full support for native Iceberg tables, which means these tables can now support replication, time travel, and other standard table features. This enhancement should greatly improve ease of use in a data lake style deployment. Expert in this area: Polita Paulus

Improved Streaming Data Pipeline Support:

10. New Streaming Data Pipelines. The main innovation is the concept of materialized tables. You can now ingest streaming data as row sets. Expert in this area: Tyler Akidau

- Funny—I presented on Snowflake's Kafka connector at Snowflake Summit 2019. Now it feels like ancient history.

Application Development Disruption with Streamlit and Native Apps:

11. Low code data application development via Streamlit: The combination of this and the Native Application Framework allows Snowflake to disrupt the entire Application Development environment. I would watch closely for how this evolves. It's still very early but this is super interesting.

12. Native Application Framework: I've been working with this tool for about three months and I find it to be a real game-changer. It empowers data professionals like us to create Data Apps, share them on a marketplace, and even monetize them. This technology is a significant step forward for Snowflake and its new branding.

Expanded Snowpark and Python Support:

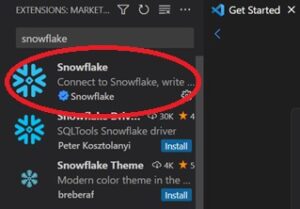

13. Python Support in the Snowflake Data Cloud. This is a major move to make it much easier for all “data constituents” to work seamlessly within Snowflake for all workloads, including machine learning. Snowflake has been working to simplify running data science workloads on its platform, and this is an ongoing effort to provide a more seamless experience.

14. Snowflake Python Worksheets. This is related to the previous announcement. It enables data scientists, who are used to Jupyter notebooks, to work more easily in a fully integrated environment within Snowflake.

New Workloads. Cybersecurity and OLTP! boom!

15. CYBERSECURITY. This was announced a while back, but it was emphasized again, and I include it here for completeness.

16. UNISTORE. OLTP-type support based on Snowflake’s Hybrid Table features. This was one of the biggest announcements by far. Snowflake is now entering a much larger part of data and application workloads by extending its capabilities beyond OLAP (online analytical processing, the big data side) into the OLTP (online transaction processing) space, which is still dominated by Oracle, SQL Server, MySQL, PostgreSQL, etc. This is a significant step that positions Snowflake as a comprehensive, integrated data cloud solution for all data and workloads.
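
For readers less familiar with the OLTP versus OLAP distinction, the following Python sketch contrasts the two query shapes. It uses the standard-library `sqlite3` module purely as a stand-in. It is not Snowflake, Unistore, or Hybrid Tables, and the `orders` table and its columns are made up for illustration.

```python
import sqlite3

# In-memory database used only to illustrate the two workload shapes.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer TEXT, amount REAL, status TEXT)"
)
conn.executemany(
    "INSERT INTO orders (id, customer, amount, status) VALUES (?, ?, ?, ?)",
    [
        (1, "acme", 120.0, "new"),
        (2, "acme", 80.0, "new"),
        (3, "globex", 200.0, "new"),
    ],
)
conn.commit()

# OLTP-style work: a small transaction that touches a single row by key.
with conn:  # commits on success, rolls back if an exception is raised
    conn.execute("UPDATE orders SET status = 'shipped' WHERE id = ?", (2,))
oltp_row = conn.execute(
    "SELECT id, status FROM orders WHERE id = ?", (2,)
).fetchone()

# OLAP-style work: scan many rows and aggregate them.
olap_rows = conn.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()

print(oltp_row)   # the single updated order
print(olap_rows)  # total amount per customer
conn.close()
```

The first query pattern (many small, keyed reads and writes) is what traditional OLTP databases are built for, while the second (large scans and aggregations) is the analytical pattern Snowflake started with.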

Additional Improvements:

17. Snowflake Overall Data Cloud Performance Improvements. This is great, but with all the other "more transformative" announcements, I'll group this together. The performance improvements include enhancements to AWS capabilities, as well as increased power per credit through internal optimizations.

18. Large Memory Instances. They did this to handle more data science workloads, demonstrating Snowflake's ongoing commitment to meeting customers' changing needs.

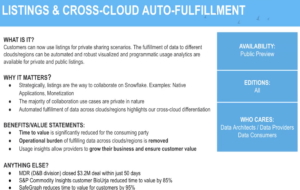

19. Data Marketplace Improvements. The Marketplace is one of my favorite things about Snowflake. They mostly announced incremental changes.

Quick “Top 3” Takeaways for me from Snowflake Summit 2022:

- Snowflake is now positioning itself well beyond a cloud database or data warehouse. It now defines itself as a full-stack business solution environment capable of creating business applications.

- Snowflake is emphasizing that it is about more than data and can handle “all workloads”: machine learning, traditional data workloads, data warehouse, data lake, and data applications. It also now has a Native App and Streamlit development toolset.

- Snowflake is expanding wherever it needs to in order to be a complete "data anywhere, anytime" data cloud. The push into better streaming data pipelines from Kafka, etc., and the new on-prem connectors allow Snowflake to take over more and more customer data cloud needs.

Snowflake at a very high level wants to:

- Disrupt Data Analytics

- Disrupt Data Collaboration

- Disrupt Data Application Development

Want more recap beyond just the features?

Here is a more in-depth take on the Keynote 7 Pillars that were mentioned:

Snowflake-related Growth Stats Summary:

2019: 938 Employees

2022 at Summit: 3992 Employees

2019: 948 Customers

2022 at Summit: 5944 Customers

2019: 96M

2022 at Summit: 1.2B
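
As a quick worked example, the growth multiples implied by these figures can be computed with a few lines of Python. The numbers are copied from the stats above. The label `unlabeled figure` is only a placeholder, because the source lists 96M and 1.2B without naming the metric.

```python
# Figures copied from the stats above: (2019 value, 2022-at-Summit value).
stats = {
    "employees": (938, 3992),
    "customers": (948, 5944),
    # The source lists 96M -> 1.2B without naming the metric.
    "unlabeled figure": (96_000_000, 1_200_000_000),
}


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


multiples = {
    name: round(growth_multiple(start, end), 2)
    for name, (start, end) in stats.items()
}

for name, multiple in multiples.items():
    print(f"{name}: {multiple}x")
```

This works out to roughly 4.26x for employees, 6.27x for customers, and 12.5x for the unlabeled figure.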

Snowflake’s 7 Pillars of Innovations:

Let’s go through the 7 pillars of Snowflake innovation:

- All Workloads – Snowflake is heavily focusing on creating an integrated platform that can handle all types of data and workloads, including ML/AI workloads through Snowpark. The original architecture's separation of compute and storage is still a key factor in the platform's power. This all-inclusive approach to workloads is a defining characteristic of Snowflake's current direction.

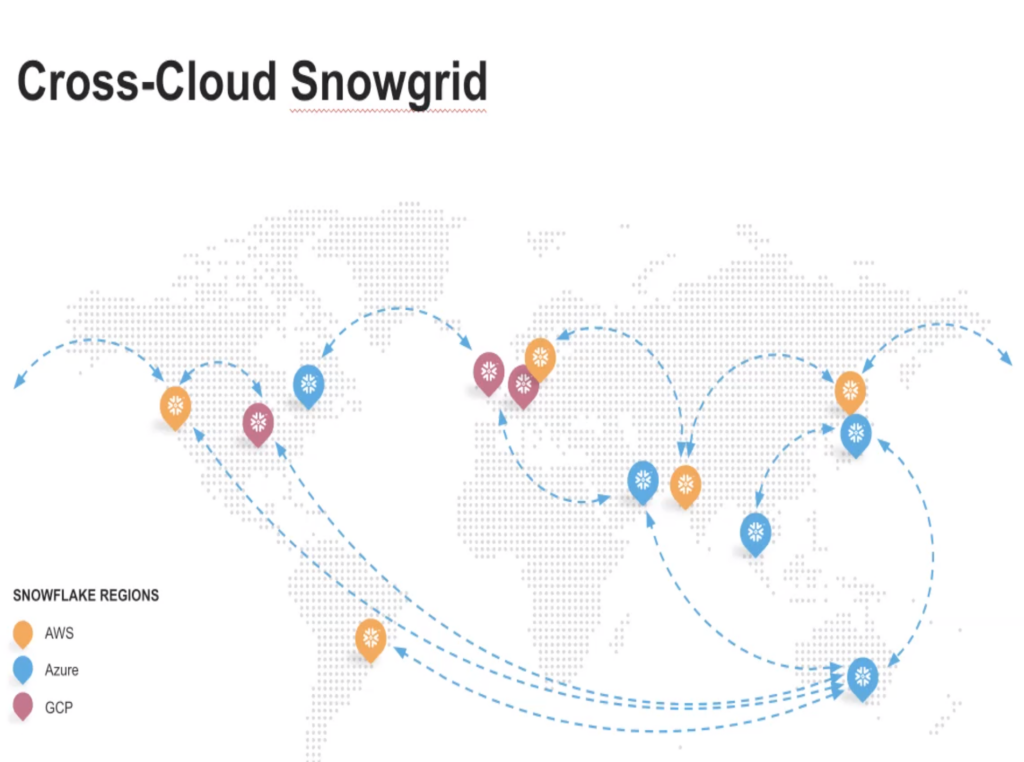

- Global – Snowflake, which is based on SnowGrid, is a fully global data cloud platform. Currently, Snowflake is deployed in over 30 cloud regions across the three main cloud providers. Snowflake aims to provide a unified global experience with full replication and failover to multiple regions, thanks to its unique architecture of SnowGrid.

- Self-managed – Snowflake says it remains committed to keeping the platform user-friendly and straightforward to use, and that this continues to be a priority.

- Programmable – Snowflake can now be programmed using not only SQL, JavaScript, Java, and Scala, but also Python and its preferred libraries. This is where Streamlit comes in.

- Marketplace – Snowflake emphasizes its continued focus on building more and more functionality into the Snowflake Marketplace (now rebranded, since it will contain both native apps and data shares). Snowflake continues to make it as easy as possible to share data and data applications through the integrated marketplace.

- Governed – Snowflake stated that they have a continuous heavy focus on data security and governance.

- All Data – Snowflake emphasizes that it can handle not only structured and semi-structured data, but also unstructured data of any scale.

Conclusion:

We hope you found this article useful!

Today’s article recapped Snowflake Summit 2022, highlighting feature announcements and innovations. Snowflake is a full-stack business solution environment with seven pillars of innovation: all data, all workloads, global, self-managed, programmable, marketplace, and governed. We covered various topics such as cost governance, data management, external table support, and cybersecurity.

If you want more news regarding Snowflake and how to optimize your Snowflake accounts, be sure to check out our blog.