Introduction:

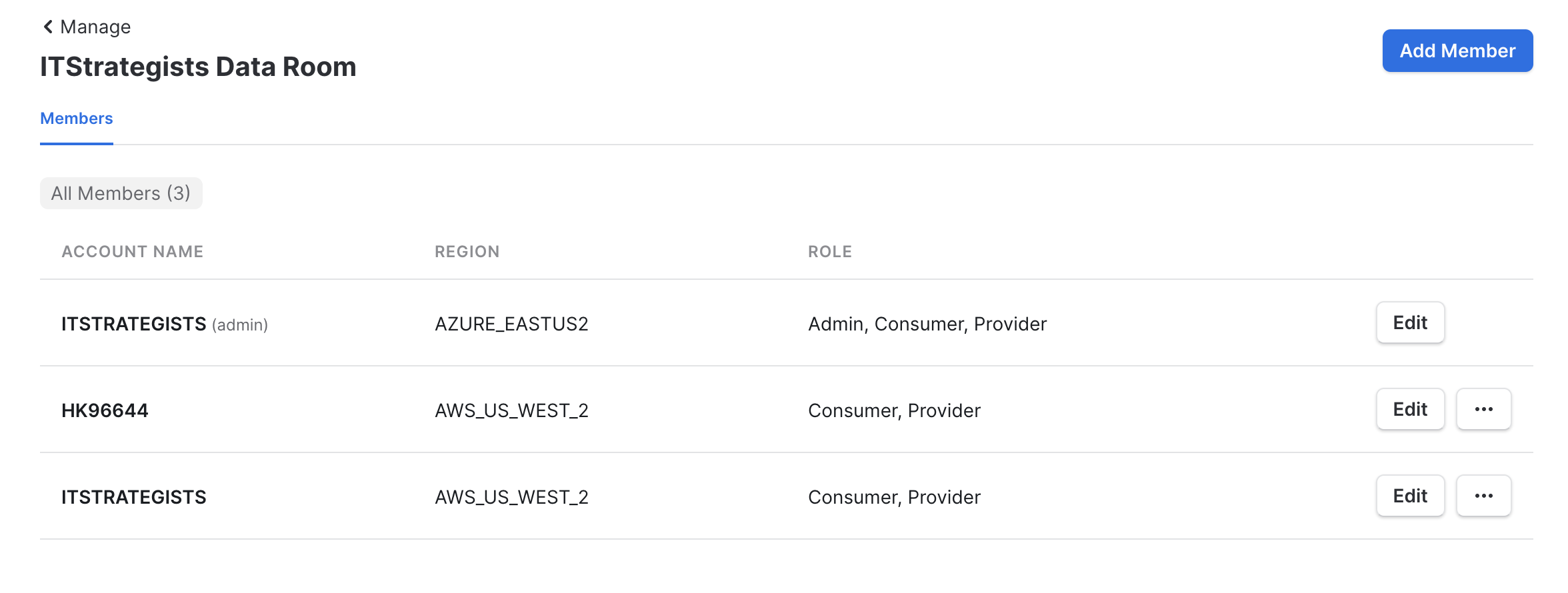

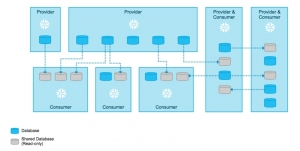

The Snowflake Data Marketplace is a cloud data exchange platform where organizations can discover, access, and share live, ready-to-query data sets in a secure, governed, and compliant manner.

Gone are the days when consumers had to copy data, use APIs, or wait days, weeks, and sometimes even months to gain access to data sets. With Snowflake Data Marketplace, analysts around the world can get the information they need to make important business decisions almost instantly.

What is it and how does it work?

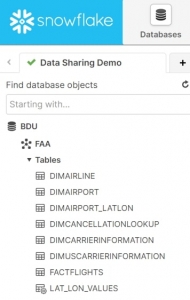

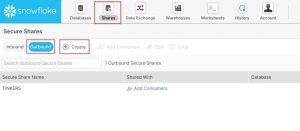

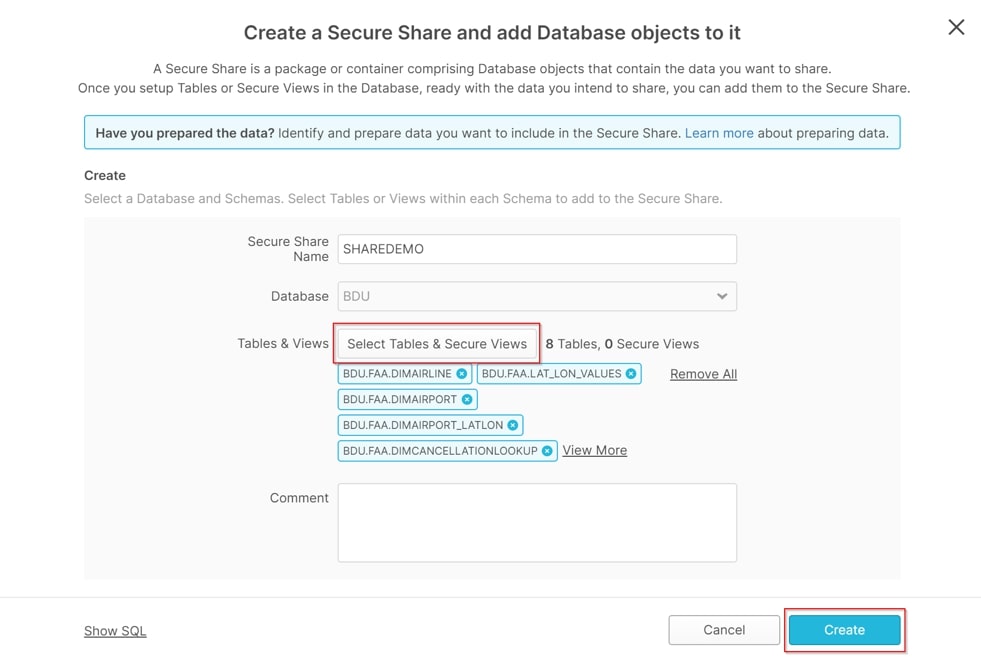

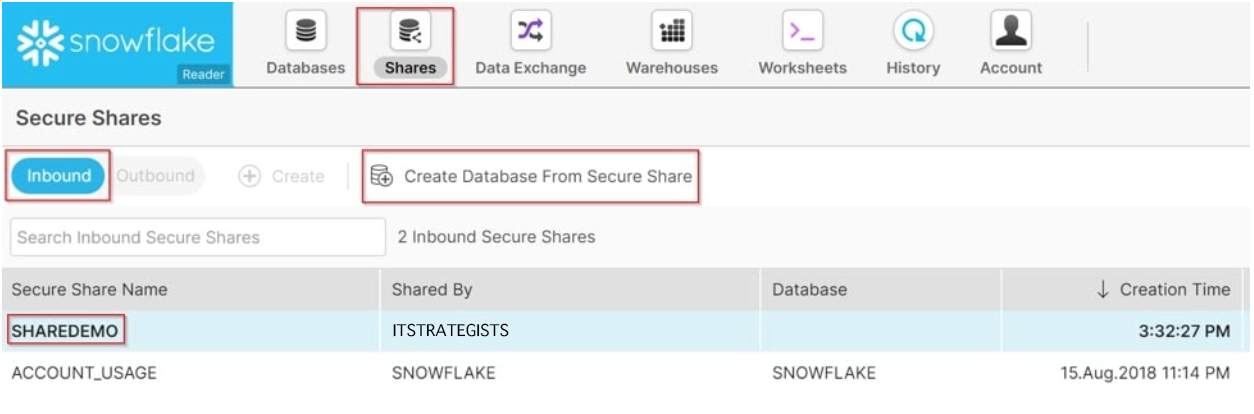

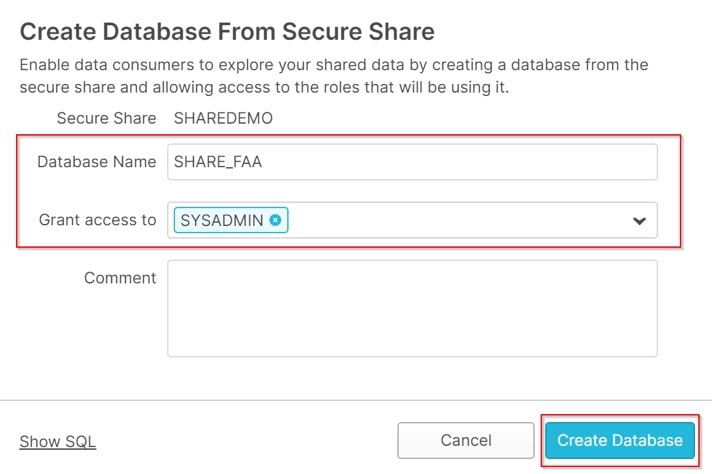

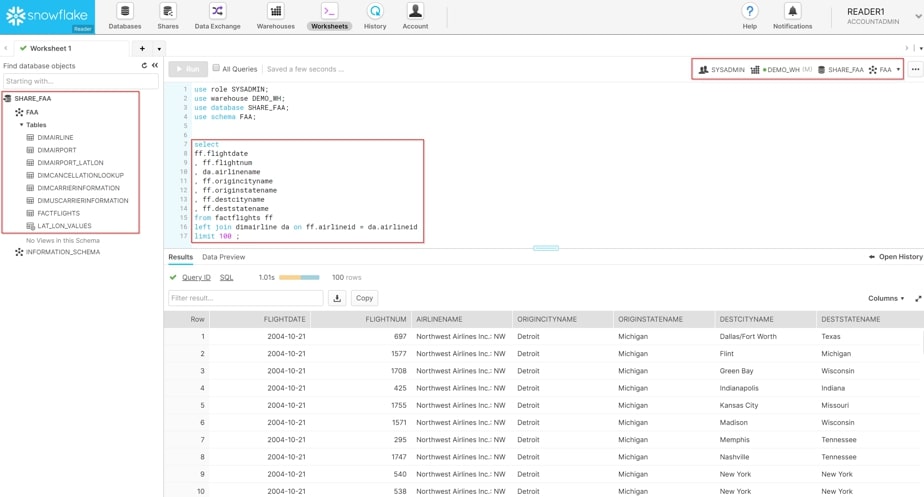

The Snowflake Data Marketplace provides access to live, query-ready data sets. It uses Snowflake's Secure Data Sharing to connect data providers and consumers. At the time of writing, it offers access to 229 data sets. As a consumer, you can discover and access a variety of third-party data sets, which become directly available to query in your Snowflake account. There's no need to transform the data or spend time preparing it to join with your own data. If you use multiple data vendors, the Data Marketplace gives you a single place to access everything.
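To make the consumer side more concrete, here is a minimal sketch in Python that only builds the SQL text a consumer might run; it does not connect to Snowflake. `CREATE DATABASE ... FROM SHARE` is Snowflake's statement for exposing a share as a read-only database, but Marketplace listings are usually obtained through the web interface, so treat this as an illustration of the underlying idea rather than the required workflow. Every account, share, database, table, and column name below is hypothetical.

```python
# Sketch only: builds SQL strings, does not connect to Snowflake.
# All identifiers (accounts, shares, tables, columns) are hypothetical
# and are assumed to be trusted constants, not user input.


def mount_share_sql(database: str, provider_account: str, share: str) -> str:
    """Statement that exposes a provider's share as a local, read-only database."""
    return f"CREATE DATABASE {database} FROM SHARE {provider_account}.{share}"


def join_query_sql(shared_table: str, own_table: str, key: str) -> str:
    """Query that joins a shared table with one of the consumer's own tables."""
    return (
        f"SELECT o.*, s.* "
        f"FROM {own_table} AS o "
        f"JOIN {shared_table} AS s ON o.{key} = s.{key}"
    )


statements = [
    mount_share_sql("WEATHER_DATA", "PROVIDER_ACCT", "WEATHER_SHARE"),
    join_query_sql("WEATHER_DATA.PUBLIC.DAILY", "SALES.PUBLIC.ORDERS", "POSTAL_CODE"),
]

for statement in statements:
    print(statement)
```

The point of the sketch is that no copy or load step appears anywhere: the shared data is queried in place, next to the consumer's own tables.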

Why is this Marketplace important?

Companies can now securely share and access live, regulated data in real time without copying or moving it. In the past, getting access to such data could take days, weeks, months, or even years. With the Data Marketplace, gaining access takes only a couple of minutes. More than 2,000 businesses have already requested free access to key data sets in the marketplace. This is a gold mine for anyone who wants on-demand access to live, ready-to-query data, and the best part is that it's available globally, across clouds.

There are significant benefits for both providers and consumers. Three in particular help companies unlock their potential with the Data Marketplace:

Source Data Faster and More Easily

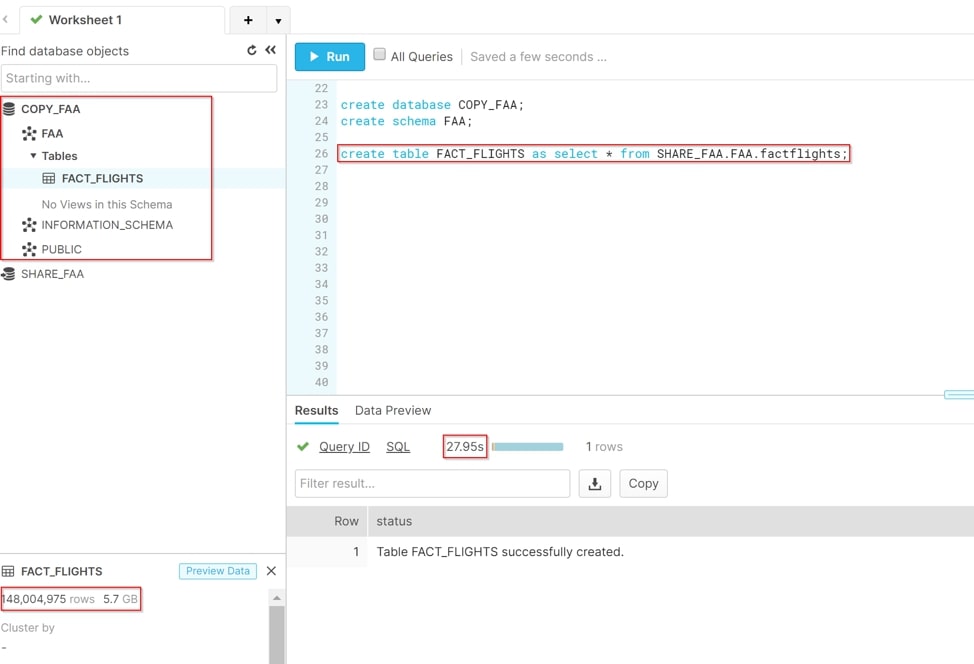

- As noted above, consumers on the Snowflake Data Marketplace avoid the risk and hassle of copying and migrating stale data. Instead, they securely access live, governed shared data sets and receive automatic updates in real time.

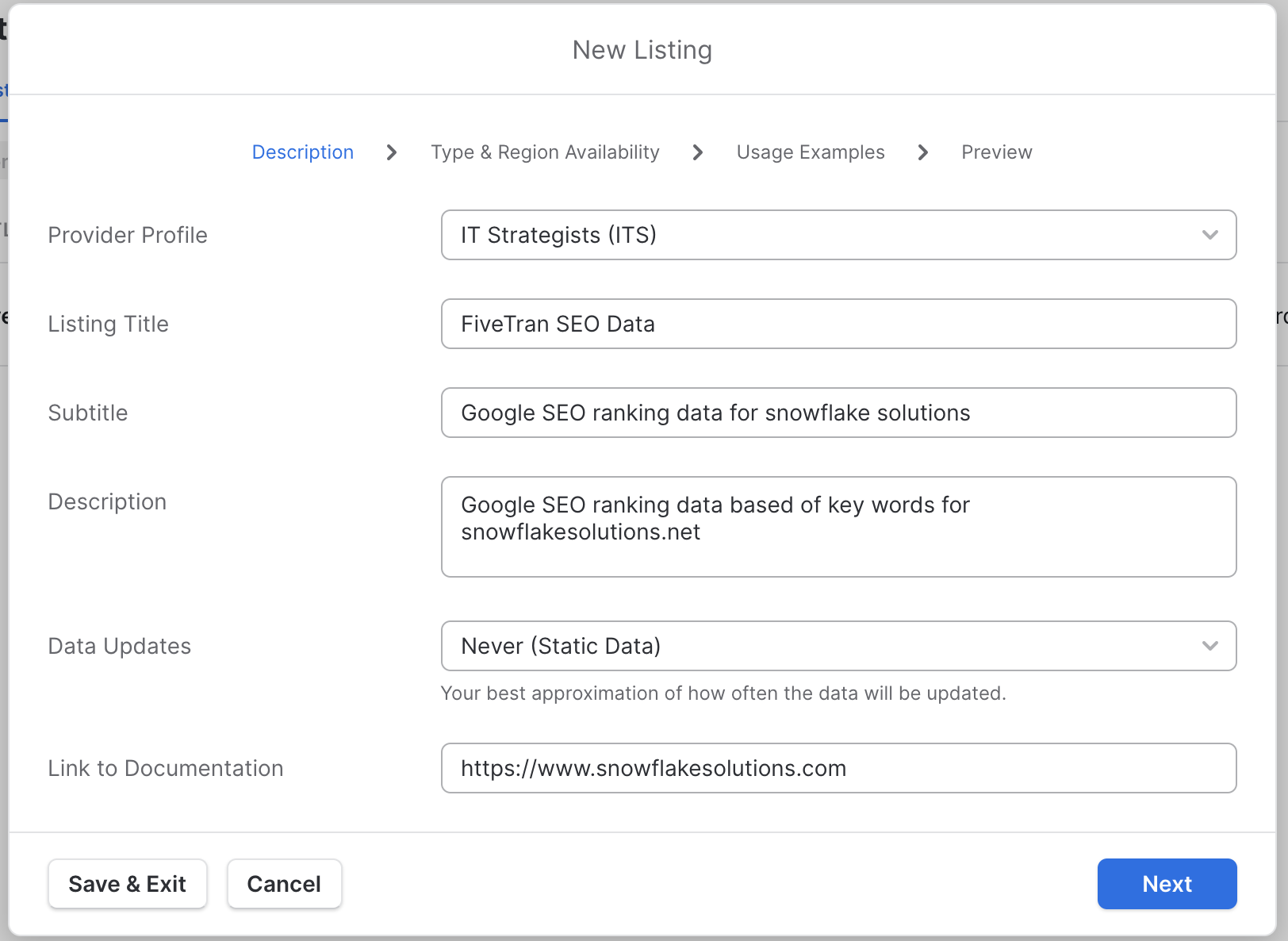

Monetize Your Data

- As a data provider on Snowflake's Data Marketplace, you can create new revenue streams by offering your governed data assets to thousands of potential Snowflake data consumers.

Reduce Analytics Costs

- Using this service eliminates the costs and effort of traditional data integration. Both consumers and providers can access shared data directly, securely, and compliantly from their Snowflake accounts. This streamlines data ingestion, pipelines, and transformation.

Learn more about Snowflake Data Marketplace

For more information on Snowflake’s Data Marketplace, visit the official website here.

If you're curious to see Snowflake's official Marketplace demo, check it out here.

Action Items after reading this article:

- Visit the Snowflake Data Marketplace official website.

- Check out Snowflake's official Marketplace demo.

- Learn more about accessing live, query-ready data sets through Snowflake's Data Marketplace.

- Find, try out, and purchase the data and applications you require to power innovative business solutions.

- Discover how data providers can create new revenue streams by offering governed data assets.

- Understand how the Data Marketplace reduces analytics costs by streamlining data ingestion, pipelines, and transformation.